Virtualisation with Ceph and OpenNebula

Matthew Richardson

March 2013

What do we want?

Management-Speak

"A highly available virtualisation pool that copes with hardware failure and service interruption, integrating aspects of autonomic decision-making, using configuration management for both service and guest deployment, whilst remaining performant and cost-effective in comparison to physical hardware solutions."

Availability

- Guests should always be running

- Migrations should be live

- No scheduled downtime

Redundancy

- Hosts go down

- Disks fail

- Guests crash

Flexibility

- Load balancing, monitoring, scheduling

- Scaling must be easy

- Automate as much as possible

- Manual control available if needed

How do we get it?

Virtualisation

Libvirt & qemu/KVM

- Handles live migrations

- Hugely configurable

- Guest OS agnostic

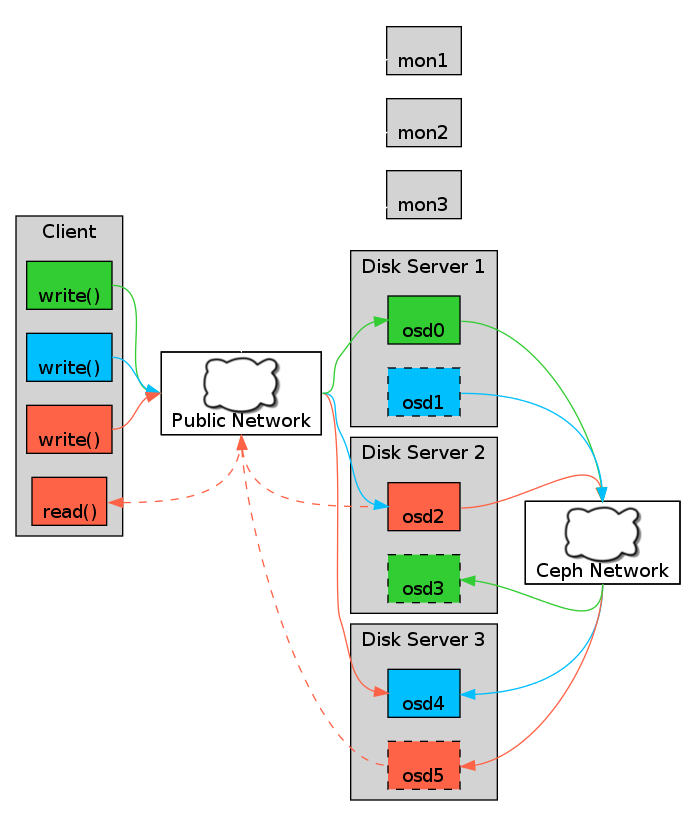
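As a minimal sketch of a live migration driven through libvirt, the helper below only builds a virsh migrate --live command line; it does not run it. The guest name guest01 and destination host vmhost2 are hypothetical, and the qemu+ssh://HOST/system URI assumes SSH transport to the destination's system libvirtd.

```python
import shlex


def live_migrate_command(domain: str, dest_host: str, persistent: bool = True) -> list[str]:
    """Build (but do not run) a virsh command for a live migration.

    Assumes qemu+ssh transport to the destination's system libvirtd.
    """
    dest_uri = f"qemu+ssh://{dest_host}/system"
    cmd = ["virsh", "migrate", "--live"]
    if persistent:
        # Define the guest on the destination and undefine it on the source,
        # so afterwards it is known to only one host.
        cmd += ["--persistent", "--undefinesource"]
    cmd += [domain, dest_uri]
    return cmd


if __name__ == "__main__":
    # Hypothetical guest and host names, for illustration only.
    print(shlex.join(live_migrate_command("guest01", "vmhost2")))
    # virsh migrate --live --persistent --undefinesource guest01 qemu+ssh://vmhost2/system
```

Because guest disks live on shared network storage, no --copy-storage-all option is needed: only memory and device state move between hosts.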

Storage

Ceph (rbd)

- Network block devices

- Distributed & replicated

- Scalable (automatically)

- Sparse disk images

- qemu integration

- ...
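To illustrate how an RBD image can be both distributed and sparse: the image is split into fixed-size objects (4 MiB by default), and an object only exists in the cluster once something has been written to it. The toy model below captures just that mapping. It makes no real Ceph calls, ignores custom striping, and says nothing about where CRUSH places each object. The 20 GiB disk is a hypothetical example.

```python
OBJECT_SIZE = 4 * 1024 * 1024  # default RBD object size (order 22)


def objects_touched(offset: int, length: int, object_size: int = OBJECT_SIZE) -> range:
    """Return the indices of the objects that `length` bytes at `offset` fall into."""
    if length <= 0:
        return range(0)
    first = offset // object_size
    last = (offset + length - 1) // object_size
    return range(first, last + 1)


class SparseImage:
    """Toy thin-provisioned image: only objects that have been written are allocated."""

    def __init__(self, size: int, object_size: int = OBJECT_SIZE):
        self.size = size
        self.object_size = object_size
        self.allocated: set[int] = set()

    def write(self, offset: int, length: int) -> None:
        if offset < 0 or offset + length > self.size:
            raise ValueError("write outside image bounds")
        self.allocated.update(objects_touched(offset, length, self.object_size))

    def used_bytes(self) -> int:
        # Upper bound: counts each allocated object once, at full size.
        return len(self.allocated) * self.object_size


if __name__ == "__main__":
    GiB = 1024 ** 3
    img = SparseImage(size=20 * GiB)      # hypothetical 20 GiB guest disk
    img.write(0, 1024 * 1024)             # 1 MiB at the start: object 0
    img.write(10 * GiB, 6 * 1024 * 1024)  # 6 MiB mid-disk: spans two objects
    print(len(img.allocated), img.used_bytes() // (1024 * 1024), "MiB")  # 3 12 MiB
```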

Ceph in Action

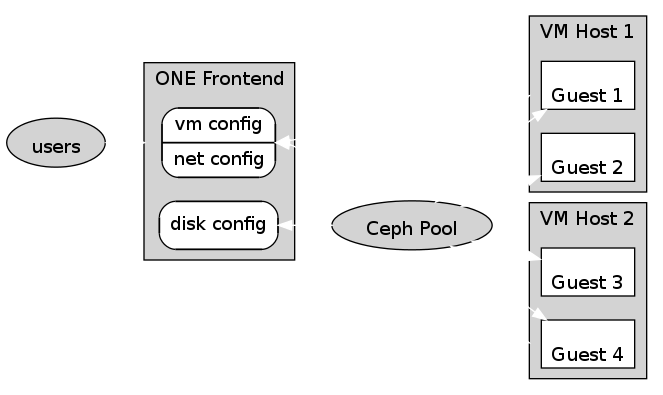

Cluster Management

- 'Cloud Management Platform'

- Controls resources:

  - VM Hosts

  - Disk Images

  - Guest VMs

  - Guest networking

- Done with libvirt & Ceph integration
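At the hypervisor level, the libvirt and Ceph integration comes down to declaring a guest disk in libvirt domain XML as a network disk using the rbd protocol, which qemu then opens directly. The sketch below generates such a disk element with Python's standard library. The pool, image and monitor names are hypothetical, cephx authentication is left out, and this is not necessarily the exact XML OpenNebula emits.

```python
import xml.etree.ElementTree as ET


def rbd_disk_xml(pool: str, image: str, monitors: list[str], target: str = "vda") -> str:
    """Build a libvirt <disk> element for an RBD-backed guest disk.

    Authentication (<auth>/<secret>) is deliberately left out of this sketch.
    """
    disk = ET.Element("disk", type="network", device="disk")
    # qemu accesses RBD images as raw block devices.
    ET.SubElement(disk, "driver", name="qemu", type="raw")
    source = ET.SubElement(disk, "source", protocol="rbd", name=f"{pool}/{image}")
    for mon in monitors:
        # 6789 is the conventional Ceph monitor port.
        ET.SubElement(source, "host", name=mon, port="6789")
    ET.SubElement(disk, "target", dev=target, bus="virtio")
    return ET.tostring(disk, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical pool, image and monitor names.
    print(rbd_disk_xml("one", "guest01-disk0", ["mon1", "mon2"]))
```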

Useful Features

- Relatively simple to set up

- Resource creation from templates

- Failure monitoring & hooks

- Load-balancing & guest placement

- Automatic behaviour can be turned off
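Resource creation from templates uses OpenNebula's ATTRIBUTE = value syntax, with vector attributes such as DISK and NIC written in square brackets. As a minimal sketch, the function below renders a Python dictionary into that syntax so a script could generate templates. Only a small subset of attributes is shown, the guest, image and network names are hypothetical, and values containing double quotes are rejected rather than guessing at escaping rules.

```python
def render_template(attrs: dict) -> str:
    """Render a dict as an OpenNebula-style template.

    Scalars become NAME = "value"; dicts become vector attributes,
    NAME = [ KEY = "value", ... ]; lists of dicts repeat the vector
    attribute (e.g. several DISKs).
    """
    def quote(value) -> str:
        text = str(value)
        if '"' in text:
            # Escaping rules are not modelled here, so refuse rather than guess.
            raise ValueError(f"value contains a double quote: {text!r}")
        return f'"{text}"'

    def vector(name: str, sub: dict) -> str:
        inner = ", ".join(f"{k} = {quote(v)}" for k, v in sub.items())
        return f"{name} = [ {inner} ]"

    lines = []
    for name, value in attrs.items():
        if isinstance(value, dict):
            lines.append(vector(name, value))
        elif isinstance(value, list):
            lines.extend(vector(name, item) for item in value)
        else:
            lines.append(f"{name} = {quote(value)}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_template({
        "NAME": "web01",                  # hypothetical guest name
        "CPU": 1,
        "MEMORY": 1024,                   # MB
        "DISK": [{"IMAGE": "sl6-base"}],  # hypothetical image name
        "NIC": {"NETWORK": "public"},     # hypothetical virtual network
    }))
```

The rendered text could then be saved to a file and registered with onetemplate create.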

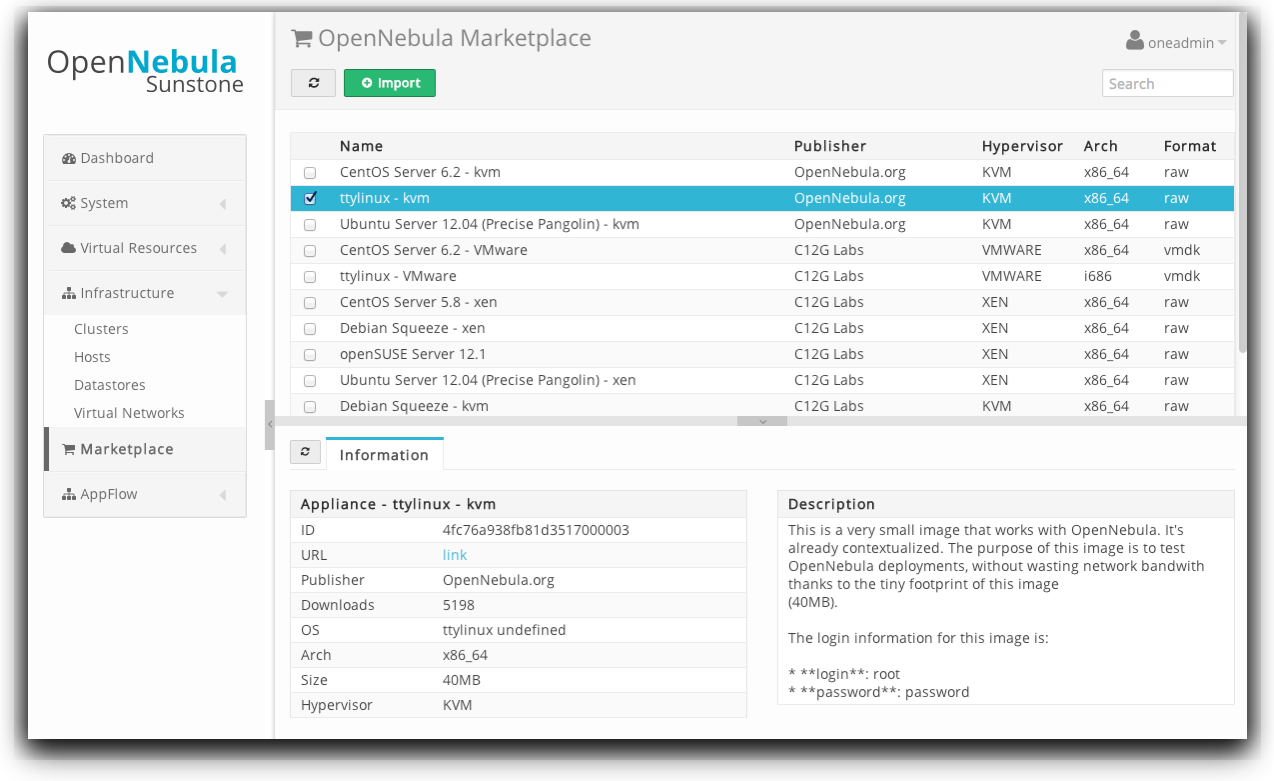

OpenNebula in Action

Management Tools

OpenNebula Sunstone (web interface)

Command-line

- Similar functionality to Sunstone

- Scriptable, parseable

- 'Personal preference'
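Because the command-line tools are scriptable and parseable, their output can feed other tools. The sketch below assumes the CLI's XML output (for example onevm list --xml) and extracts running guests. The sample document is hand-written and heavily trimmed, not captured output. A guest counts as running when its STATE is 3 (ACTIVE) and its LCM_STATE is 3 (RUNNING).

```python
import xml.etree.ElementTree as ET

ACTIVE = "3"   # OpenNebula VM STATE code for ACTIVE
RUNNING = "3"  # OpenNebula LCM_STATE code for RUNNING

# Hand-written, trimmed sample of a VM pool document (illustrative only).
SAMPLE = """
<VM_POOL>
  <VM><ID>12</ID><NAME>web01</NAME><STATE>3</STATE><LCM_STATE>3</LCM_STATE></VM>
  <VM><ID>13</ID><NAME>db01</NAME><STATE>3</STATE><LCM_STATE>4</LCM_STATE></VM>
  <VM><ID>14</ID><NAME>test01</NAME><STATE>1</STATE><LCM_STATE>0</LCM_STATE></VM>
</VM_POOL>
"""


def running_vms(pool_xml: str) -> list[tuple[int, str]]:
    """Return (id, name) for every VM that is ACTIVE and RUNNING."""
    root = ET.fromstring(pool_xml.strip())
    result = []
    for vm in root.findall("VM"):
        if vm.findtext("STATE") == ACTIVE and vm.findtext("LCM_STATE") == RUNNING:
            result.append((int(vm.findtext("ID")), vm.findtext("NAME")))
    return result


if __name__ == "__main__":
    print(running_vms(SAMPLE))  # [(12, 'web01')]
```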

LCFG

- Component:

  - Resource creation (& deletion)

  - ONEDB backup and restore methods

  - External inventory integration
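One way a configuration-management component can handle resource creation (and deletion) is to reconcile a declared list against what already exists. The sketch below shows only that set arithmetic. It is a hypothetical illustration, not the LCFG component's actual code, and the resource names are made up. Deletion is opt-in, so a resource missing from the configuration is not removed by accident.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    create: list[str] = field(default_factory=list)
    delete: list[str] = field(default_factory=list)
    keep: list[str] = field(default_factory=list)


def reconcile(declared: set[str], existing: set[str], allow_delete: bool = False) -> Plan:
    """Work out which named resources to create, keep, or (optionally) delete."""
    plan = Plan(
        create=sorted(declared - existing),
        keep=sorted(declared & existing),
    )
    if allow_delete:
        # Only remove undeclared resources when explicitly allowed.
        plan.delete = sorted(existing - declared)
    return plan


if __name__ == "__main__":
    # Hypothetical resource names, e.g. images or virtual networks.
    print(reconcile({"web01", "db01"}, {"db01", "old01"}, allow_delete=True))
```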

Demo (?)

[Diagram: LCFG, OpenNebula, vmhost 1, vmhost 2, guest VM]

Future Work

- Advanced guest placement

- More clusters: research, teaching, development

- Wider use: shared LCFG config

- Use a different Management Platform?
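As a starting point for thinking about advanced guest placement: OpenNebula's scheduler filters hosts on requirements and then orders the remaining hosts by a rank expression. The sketch below imitates that idea with made-up host data. The host fields, the capacity check and the default "most free memory" rank are assumptions for illustration, not OpenNebula's implementation.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Host:
    name: str
    free_cpus: float  # free cores (assumed unit)
    free_mem: int     # MB
    cluster: str


def place(hosts: list[Host], cpus: float, mem: int,
          requirement: Callable[[Host], bool] = lambda h: True,
          rank: Callable[[Host], float] = lambda h: h.free_mem) -> Optional[Host]:
    """Drop hosts that lack capacity or fail the requirement, then take the highest rank."""
    candidates = [h for h in hosts
                  if h.free_cpus >= cpus and h.free_mem >= mem and requirement(h)]
    if not candidates:
        return None  # nothing fits; the guest would stay pending
    return max(candidates, key=rank)


if __name__ == "__main__":
    # Hypothetical hosts and clusters.
    pool = [
        Host("vmhost1", free_cpus=2, free_mem=4096, cluster="production"),
        Host("vmhost2", free_cpus=6, free_mem=16384, cluster="production"),
        Host("vmhost3", free_cpus=8, free_mem=32768, cluster="research"),
    ]
    chosen = place(pool, cpus=2, mem=2048,
                   requirement=lambda h: h.cluster == "production")
    print(chosen.name if chosen else "no host")  # vmhost2
```

Swapping the rank function (for example, preferring the least-loaded host, or packing guests onto as few hosts as possible) changes the placement policy without touching the filtering step.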

Any Questions?

m.richardson@ed.ac.uk